This is my first blog post, and it's going to be about my activation function.

I made an activation function called Swish 2.0. It's an improvement on the Swish activation function. A video explaining what a neural network and an activation function are can be found here: https://youtu.be/bfmFfD2RIcg

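An activation function is an important part of a neural network because it introduces non-linearity into the model. Without it, the network could only learn straight-line (linear) relationships, so adding one lets the model fit more complex patterns and usually makes it more accurate. Many activation functions exist, and here is a list of some of them: https://en.m.wikipedia.org/wiki/Activation_function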
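To show why non-linearity matters, here is a small sketch with two made-up one-dimensional "layers" (the weights are hypothetical and only for illustration). Stacking them directly still gives a straight line, but putting a non-linear activation (ReLU here, not Swish) between them bends the line.

```python
# Two hypothetical 1-D "layers" with made-up weights, for illustration only.
def layer1(x: float) -> float:
    return 2.0 * x + 1.0

def layer2(x: float) -> float:
    return 3.0 * x - 4.0

def relu(x: float) -> float:
    # A simple non-linear activation: max(0, x)
    return max(0.0, x)

def no_activation(x: float) -> float:
    # Stacking the layers directly: 3 * (2x + 1) - 4 = 6x - 1, still a straight line
    return layer2(layer1(x))

def with_activation(x: float) -> float:
    # Putting ReLU between the layers flattens the output for x <= -0.5
    return layer2(relu(layer1(x)))

for x in [-2.0, -1.0, 0.0, 1.0]:
    print(x, no_activation(x), with_activation(x))
```

Without the activation, the two layers collapse into one linear function (6x - 1). With it, the output stays flat at -4 for inputs at or below -0.5, which no single straight line can do.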

So I experimented with activation functions and made my own. It outperforms an activation function called Swish, which was made by Google.

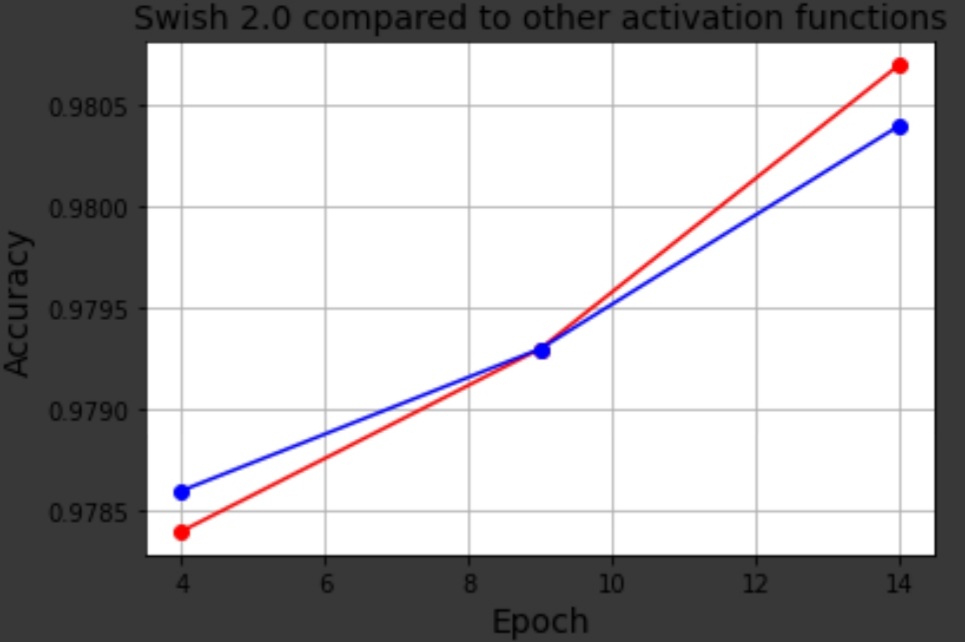

As you can see in the graph above, the red line (my activation function) rises above the blue line (Swish), which shows that my activation function performed better in this comparison.

My activation function is very similar to Swish, yet it still outperformed Swish.

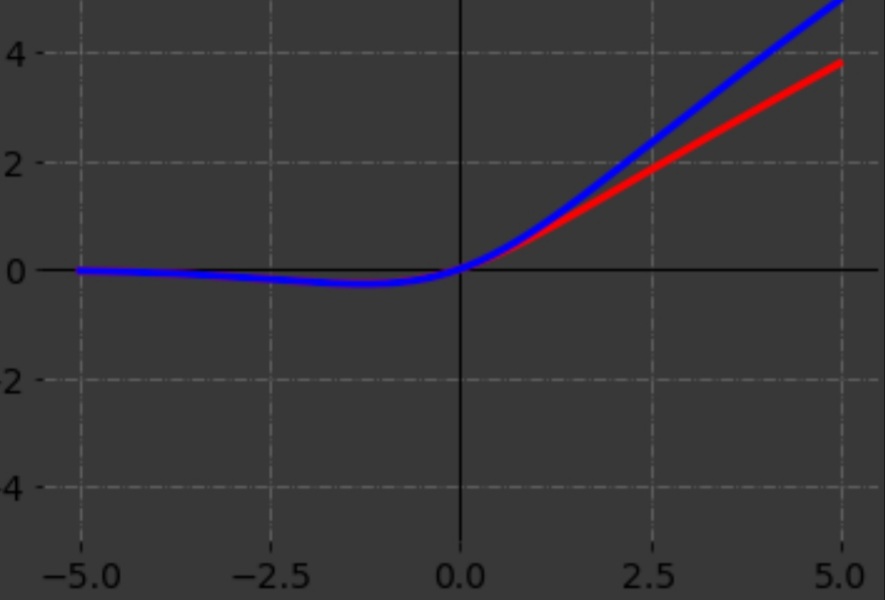

Activation functions can also be graphed, here is a graph of swish and my activation function :

In the graph above, the blue line is Swish and the red line is my activation function. As you can see, on the left side of the graph the blue and red lines line up.

That means Swish and my activation function give about the same output for inputs between -5.0 and 0.

But towards the right side of the graph, the blue and red lines diverge, meaning the two functions start to give different outputs.
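For reference, here is a minimal sketch of the original Swish function, defined as x times sigmoid(βx), with β = 1 by default (this case is also known as SiLU). It does not include Swish 2.0, whose formula isn't given here, and the `swish` and `sigmoid` names are just my own for this example. The loop prints Swish's outputs over the -5.0 to 0 range where the two lines line up.

```python
import math

def sigmoid(x: float) -> float:
    # Numerically stable logistic sigmoid: avoids overflow in math.exp for large |x|
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def swish(x: float, beta: float = 1.0) -> float:
    # Swish: x * sigmoid(beta * x); with beta = 1 this is also called SiLU
    return x * sigmoid(beta * x)

# Print Swish's outputs from -5.0 to 0 in steps of 1.0
for x in [-5.0, -4.0, -3.0, -2.0, -1.0, 0.0]:
    print(f"swish({x}) = {swish(x):.4f}")
```

In this range Swish stays close to zero and slightly negative, so two functions built on it can easily end up with nearly identical outputs there.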

Swish 2.0 (my activation function) could help improve the performance of many neural networks.

By Sahal Mulki